May 05, 2021

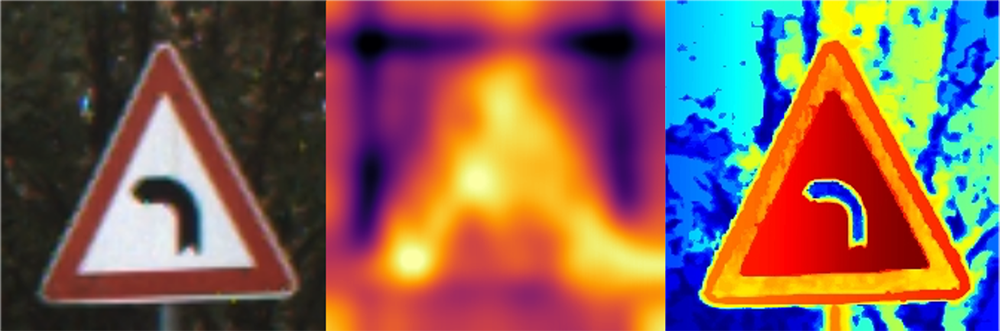

Three views of a traffic sign as processed by a computer vision system. The first is the original image captured by a standard camera. The second and third are qualitative results from two different algorithms, illustrating which features of the sign the machine considers important.

Machines can show humans why they made a decision by revealing how they prioritize the features of what they see. That’s the takeaway from a paper by Mizzou Engineers that has been accepted to an international conference this summer.

Charlie Veal, a PhD student in computer science, will present the work on evolutionary and neural attention at the Congress on Evolutionary Computation in June. The event, under the umbrella of the Institute of Electrical and Electronics Engineers (IEEE), will be hosted virtually from Poland.


In the paper, Veal and his co-authors analyzed and compared algorithms and techniques used to explain the decisions of neural networks, artificial intelligence systems that learn from the data they are given.

“This paper establishes criteria for visually explaining computer vision algorithms,” Veal said. “We performed a comparative analysis between neural and evolutionary algorithms and determined which are useful to improve AI transparency and performance.”

Why It Matters

The “why” behind a machine’s decision matters more as artificial intelligence becomes mainstream in technologies such as self-driving cars. If humans are to trust an autonomous vehicle on the road, we need assurance that the car can make quick decisions based on what it deems important, such as recognizing a pedestrian and stopping when one walks in front of it.

There are different ways a machine can describe how it makes decisions. In this case, Veal’s team used imagery.

These images, called attention maps, show researchers which parts of a scene a machine focuses on, highlighting the features it deems important.

In the case of a self-driving vehicle, the car’s vision system might detect and prioritize the shape of a traffic sign but not the content on it. That means the system can sometimes overlook important information such as speed limit numbers or directional graphics, even when the camera has captured them.
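The article does not describe how the team’s neural and evolutionary attention methods work, so the sketch below swaps in a different, widely used technique, occlusion sensitivity, purely to illustrate the idea of an attention map: hide one patch of the image at a time and record how much the model’s score drops. The names `occlusion_map` and `border_only_score` are hypothetical, and the “model” is a toy stand-in that responds only to a sign’s outline, mimicking a system that notices a sign’s shape but ignores its contents.

```python
from typing import Callable, List

Image = List[List[float]]


def occlusion_map(image: Image, score: Callable[[Image], float],
                  patch: int = 2, baseline: float = 0.0) -> List[List[float]]:
    """Coarse attention map: how much the score drops when each patch is hidden."""
    h, w = len(image), len(image[0])
    base_score = score(image)
    heat = []
    for top in range(0, h, patch):
        row = []
        for left in range(0, w, patch):
            occluded = [r[:] for r in image]  # copy so the original image is untouched
            for y in range(top, min(top + patch, h)):
                for x in range(left, min(left + patch, w)):
                    occluded[y][x] = baseline  # "hide" this pixel
            # A large drop means the model relied on this region.
            row.append(base_score - score(occluded))
        heat.append(row)
    return heat


def border_only_score(image: Image) -> float:
    """Hypothetical model that looks only at the outer border (the sign's shape)."""
    h, w = len(image), len(image[0])
    return sum(image[y][x] for y in range(h) for x in range(w)
               if y in (0, h - 1) or x in (0, w - 1))


# A toy 6x6 "sign" where every pixel is lit.
sign = [[1.0] * 6 for _ in range(6)]
heat = occlusion_map(sign, border_only_score, patch=2)
for row in heat:
    print(row)
# [3.0, 2.0, 3.0]
# [2.0, 0.0, 2.0]
# [3.0, 2.0, 3.0]
```

The zero in the center of the map is the point: hiding the middle of the sign, where a speed limit number would appear, does not change the toy model’s score at all, which is exactly the kind of blind spot an attention map is meant to expose.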

“If I want to make a computer vision system better, I need to see what features the machine is looking at that make it succeed or fail,” said Derek Anderson, associate professor of electrical engineering and computer science and a co-author of the paper. “Or, if there are objects I want it to recognize, I need to provide more examples so the machine knows what features are important.”

By knowing what additional information to provide the machine, developers can improve how it makes decisions.

“If we can understand the best way to quantitatively express what a machine finds important about a specific problem, that’s explainability and then we can know how to best use these systems,” Veal said. “A machine needs a way to explain back to humans or express why its decisions are meaningful and why we can trust it.”

Co-authors on the paper include Scott Kovaleski, professor of electrical engineering and computer science; Marshall Lindsay, a PhD student; and Stanton Price with the U.S. Army Engineer Research and Development Center (ERDC).