September 15, 2021

In theory, augmented reality (AR) has the potential to transform online learning, allowing students to interact with virtual components in their own physical settings. In reality, however, the technology falls short. Now, a Mizzou Engineering team has devised a way to improve AR so that it can be better used to enhance educational experiences.

Unlike virtual reality — which immerses a user entirely in a simulated environment — AR superimposes virtual objects over the user’s real environment through goggles or a smartphone screen.

So far, though, AR hasn’t proven to be effective in engineering educational settings. Previous studies have shown that students lose attention or feel uncomfortable with complex augmented materials. That’s because the virtual components don’t always show up at the right place at the right time, said Jung Hyup Kim, an associate professor in the Department of Industrial and Manufacturing Systems Engineering.

“Everybody expects that if you wear AR glasses, the physical objects will be harmonized with the virtual objects perfectly without any flaws,” he said. “But there are a lot of gaps between the computer-generated 3D images and the real objects. If you want to learn something through AR, you might be more confused because the 3D images appear in different positions.”

To combat those issues, Kim and Wenbin Guo, who earned his PhD in industrial engineering at Mizzou, developed a more advanced AR system that uses indoor GPS tracking to better position objects within the user’s physical space. They tested the system using engineering materials, such as interactive modules and lecture notes.

“Students wore a HoloLens to see AR modules and engineering content,” said Guo, now a post-doctoral associate at the University of Florida. “We also had technology to locate their position in the environment, so each position would match one of the modules showing engineering content.”

Instead of a student having to find or pull up individual augmented components, an entire lesson plan could be spread out within a user’s view.

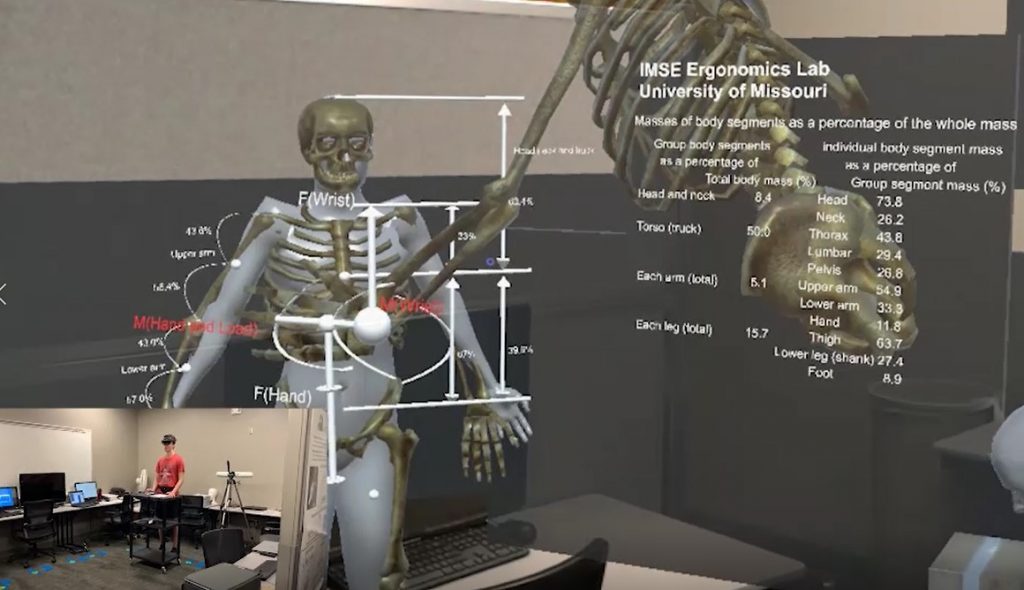

For instance, a student studying the efficiency of human body movements in the workplace could look to their left and see a life-sized diagram of a skeleton overlaid on their actual surroundings.

As the student turned their head to the center, they could see a human figure picking up a box, along with the movement of the skeletal system as it performed that task. Turning to the right, they would see virtual screens displaying information about the lecture.

“Based on the student’s location, he knows what he should learn, and if he needs to review previous content, he can move to the previous position to see the material again,” Kim said.
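
The idea of matching each position to a module can be pictured with a short Python sketch. It is only an illustration, not the team’s software: the station names, coordinates, 1-meter radius and the `module_for_position` function are all hypothetical, and the sketch assumes the tracker reports a simple (x, y) position in meters.

```python
import math

# Hypothetical stations: module name -> (x, y) position in meters.
# These names and coordinates are made up for illustration.
STATIONS = {
    "skeleton diagram": (0.0, 0.0),
    "lifting animation": (2.0, 0.0),
    "lecture notes": (4.0, 0.0),
}


def module_for_position(x, y, max_distance=1.0):
    """Return the module whose station is nearest to (x, y).

    Returns None when no station lies within max_distance meters.
    """
    # Pick the station with the smallest straight-line distance.
    name, station = min(
        STATIONS.items(),
        key=lambda item: math.dist((x, y), item[1]),
    )
    if math.dist((x, y), station) <= max_distance:
        return name
    return None
```

In this sketch, a student who walks back toward an earlier station would get that station’s module again, which mirrors the review behavior Kim describes.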

By tracking each student’s location and movements, the researchers were able to reduce the time it took students to get from one educational component to another and improve the user experience.

Guo and Kim outlined their findings at the International Conference on Applied Human Factors and Ergonomics this summer, but the work is ongoing.

“We have to get through a lot of hurdles to improve AR learning environments,” Kim said. “To make a more user-friendly system, we must understand how students will respond to the AR environment. To do that, we need to collect more data, such as eye tracking to see where students are paying more attention when they are exposed to the computer-generated 3D images.”

AR is a long way from being a perfect platform, he said, but the location tracking is a step in the right direction toward innovative remote learning experiences.

“If we can successfully develop the advanced AR learning platform, students who cannot come to campus will be able to access learning materials and labs and experience engineering content just like students who are on Mizzou campus,” Kim said.