October 25, 2021

A Mizzou Engineering team is hoping to lead artificial intelligence (AI) into a new era by forgoing real-world data in favor of simulated environments.

“State-of-the-art AI is based on a 70-year-old combo of neural networks and supervised learning. This approach is flatlining, in part, because it’s not scalable,” said Derek Anderson, associate professor of electrical engineering and computer science. “We are at a technological convergence point where simulation software, content and computing resources are available and can be used to make photorealistic computer-generated imagery that can fool a human and can be used to train AI.”

Artificial neural networks, the current reigning champion of AI, were first proposed in the 1940s, but at the time researchers couldn’t do much with them, partly because of limited computing resources and a lack of training data. Technological advances over the last decade have made it possible to train neural networks with limited functionality on a specific domain. However, a major bottleneck remains: it is difficult to obtain large amounts of diverse, accurately labeled data to train general-purpose AI.

First, getting AI to recognize an object currently requires someone to collect and label thousands or millions of images to feed into a network; training a network on such labeled examples is called supervised learning. While it’s possible to get a lot of data from video footage captured by a drone, someone still must go through each frame and label the objects in it before a computer can learn to detect them. Although alternative approaches are emerging, state-of-the-art object detection and localization still depends on labeled data.

The other problem with real-world imagery is that it doesn’t provide “ground truth,” reference data known to be completely accurate. Labels are spatially ambiguous, material properties are not always recorded, and shadows are not annotated. Without that ground truth, researchers cannot tell where AI works and where it breaks.

“Getting data from the real world is problematic,” Anderson said. “We collect a lot of data we don’t know much about, and we’re trying to use that to train AI. It’s not working.”

These are a few of the reasons why Anderson’s team in the Mizzou Information and Data Fusion Laboratory (MINDFUL) has begun using gaming components instead.

In a simulated world, researchers can manipulate environments to train the computer to recognize an object or learn a behavior in any number of scenarios. And they’ve found that they can collect and label data in weeks as opposed to years.

“Deep learning is state-of-the-art right now in computer vision, but in the real world, you get poor or no ground truth for a lot of the things we want to know,” said Brendan Alvey, a PhD candidate working in the MINDFUL Lab. “And it takes a lot of time and money to collect and label the data. Simulation makes it a lot easier.”

The team is in good company. Tech giants such as Google’s DeepMind and Tesla are also starting to improve machine learning applications through use of simulated data.

Reproducible Research

Anderson became interested in using gaming components as a way to train AI out of necessity.

When COVID-19 grounded his research using drones to detect explosive hazards, perform aerial forensic anthropology and investigate human-drone teaming using augmented reality, Anderson, who taught game design 15 years ago, turned to open-source tools and commercial software that is free for academic use.

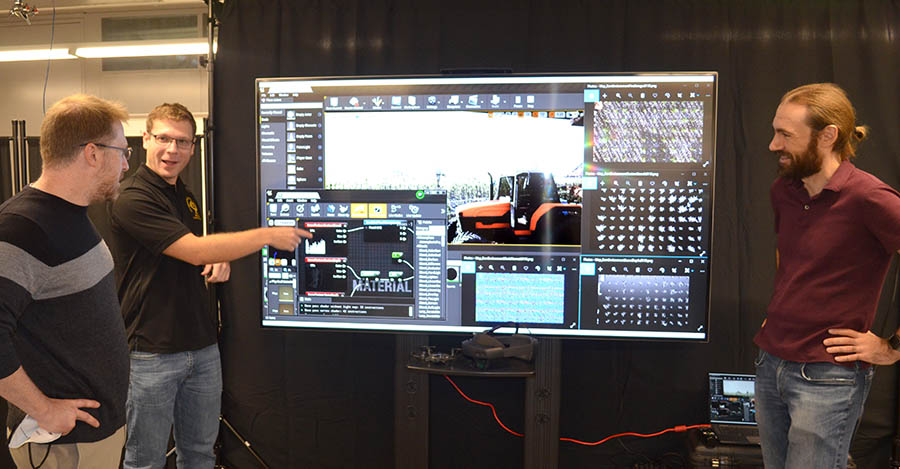

Specifically, the team is using Unreal Engine, a suite of development tools for building photorealistic virtual worlds, along with 3D content from the Unreal Marketplace, TurboSquid and Quixel, which provides a library of 3D-scanned assets.

“One of the advantages is how photorealistic the content is,” said Andrew Buck, assistant research professor in electrical engineering and computer science. “The quality of the texture and lighting on these virtual 3D objects makes it almost as good as the real thing, but we get all the benefits of simulation.”

Because the simulator knows what every object in a scene is, the computer can label objects automatically. For instance, in an agricultural context, a simulated drone in Unreal Engine automatically flies over a crop field at a specified altitude and captures RGB imagery, along with per-pixel depth and labels, to train object detection, semantic segmentation or passive ranging AI algorithms.
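Because the renderer already knows which object occupies each pixel, labels for object detection can be derived from the per-pixel label map without manual annotation. The sketch below is a minimal illustration, not the team’s pipeline: it assumes a hypothetical label mask stored as a list of rows of integer instance IDs, with 0 as background, and computes one bounding box per instance.

```python
from typing import Dict, List, Tuple

# (row_min, col_min, row_max, col_max), inclusive pixel coordinates
Box = Tuple[int, int, int, int]


def boxes_from_mask(mask: List[List[int]], background: int = 0) -> Dict[int, Box]:
    """Derive one axis-aligned bounding box per instance ID in a per-pixel mask.

    Assumption: `mask` is a hypothetical rendered label image where each pixel
    holds the integer ID of the object it shows, and `background` marks empty pixels.
    """
    boxes: Dict[int, Box] = {}
    for r, row in enumerate(mask):
        for c, label in enumerate(row):
            if label == background:
                continue  # unlabeled pixel, contributes to no box
            if label not in boxes:
                boxes[label] = (r, c, r, c)  # first pixel seen for this instance
            else:
                r0, c0, r1, c1 = boxes[label]
                # grow the box so it still encloses every pixel with this ID
                boxes[label] = (min(r0, r), min(c0, c), max(r1, r), max(c1, c))
    return boxes


if __name__ == "__main__":
    mask = [
        [0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 1, 1, 2],
        [0, 0, 0, 2],
    ]
    print(boxes_from_mask(mask))
```

For the small mask above, instance 1 spans rows 1 to 2 and columns 1 to 2, and instance 2 occupies column 3 in rows 2 to 3. A real pipeline would read the mask from the simulator’s segmentation output rather than a hand-written list.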

The team can extract other information, too, such as 3D point clouds (sets of data points in three-dimensional space), which provide the coordinates needed to turn two-dimensional objects into 3D models.
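One common way to obtain a point cloud from simulated depth is to back-project each pixel through a camera model. This is a minimal sketch under stated assumptions: an ideal pinhole camera with hypothetical intrinsics `fx`, `fy` (focal lengths in pixels) and `cx`, `cy` (principal point), and a depth map that stores distance along the camera’s optical axis. The renderer’s actual depth convention and camera parameters would need to be checked before using anything like this.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(
    depth: List[List[float]], fx: float, fy: float, cx: float, cy: float
) -> List[Point]:
    """Back-project a depth map into camera-frame 3D points (pinhole model).

    Assumptions: `depth[v][u]` is the distance along the optical axis (z) for
    pixel column u and row v; non-positive values mean "no valid depth".
    """
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels without a valid range
            x = (u - cx) * z / fx  # horizontal offset scales with distance
            y = (v - cy) * z / fy  # vertical offset scales with distance
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    depth = [[2.0, 2.0], [2.0, 0.0]]
    print(depth_to_points(depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0))
```

Pixels farther from the principal point land farther from the optical axis in proportion to their depth, which is what turns a flat image plus depth into 3D coordinates.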

“With real-world imagery, data such as depth, especially at range, is difficult to get,” Alvey said. “With simulation, you can get an abundance of data.”

Alvey and Buck are using accurate range ground truth to train models to predict depth from a single image and to use that depth information to detect objects more accurately.
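Exact range ground truth also makes it straightforward to score a single-image depth predictor pixel by pixel. As an illustration only, the sketch below computes two metrics common in the monocular depth literature, mean absolute relative error and root-mean-square error; the function name, the flattened-list input and the choice of metrics are assumptions, not the team’s published evaluation.

```python
import math
from typing import Sequence, Tuple


def depth_errors(pred: Sequence[float], truth: Sequence[float]) -> Tuple[float, float]:
    """Return (mean absolute relative error, RMSE) over pixels with valid ground truth.

    Assumption: both depth maps are flattened to equal-length sequences, and a
    ground-truth value <= 0 marks a pixel with no valid range.
    """
    pairs = [(p, t) for p, t in zip(pred, truth) if t > 0]
    if not pairs:
        raise ValueError("no valid ground-truth pixels")
    # relative error penalizes mistakes on nearby surfaces more than distant ones
    abs_rel = sum(abs(p - t) / t for p, t in pairs) / len(pairs)
    # RMSE is expressed in the same units as the depth values
    rmse = math.sqrt(sum((p - t) ** 2 for p, t in pairs) / len(pairs))
    return abs_rel, rmse


if __name__ == "__main__":
    print(depth_errors([3.0, 4.0], [2.0, 4.0]))
```

In simulation every pixel can have valid ground truth, so the masking step matters mostly when the same metric is applied to sparse real-world range data.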

The research team is also combining simulation and real-world data in creative ways. For example, Alvey showed how to collect a large, uniformly sampled aerial dataset in Unreal Engine. He then ran an AI model trained on real-world data on the simulated data. The result is a visualization of the model’s blind spots, a graphical approach to explainable AI. This can help researchers identify a model’s weaknesses and direct future simulated or real-world data collection toward training a more reliable model.
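The idea behind a blind-spot map can be illustrated with a simple aggregation: because the simulated data are sampled uniformly over capture conditions, the model’s hit rate can be tallied per condition and the weak cells flagged. This generic sketch is not Alvey’s method; it assumes hypothetical per-image results tagged with a drone altitude and view angle, and an arbitrary threshold of 0.5.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Cell = Tuple[float, float]  # (altitude, view angle) of one sampling-grid cell


def detection_rates(results: Iterable[Tuple[float, float, bool]]) -> Dict[Cell, float]:
    """Fraction of targets detected in each (altitude, angle) cell.

    Assumption: each result is (altitude, angle, detected) for one simulated
    image, where altitude and angle are already snapped to a uniform grid.
    """
    hits: Dict[Cell, int] = defaultdict(int)
    totals: Dict[Cell, int] = defaultdict(int)
    for altitude, angle, detected in results:
        cell = (altitude, angle)
        totals[cell] += 1
        hits[cell] += int(detected)
    return {cell: hits[cell] / totals[cell] for cell in totals}


def blind_spots(rates: Dict[Cell, float], threshold: float = 0.5) -> List[Cell]:
    """Cells where the detection rate falls below the (hypothetical) threshold."""
    return sorted(cell for cell, rate in rates.items() if rate < threshold)


if __name__ == "__main__":
    results = [
        (10.0, 0.0, True), (10.0, 0.0, True),
        (10.0, 45.0, False), (10.0, 45.0, True),
        (30.0, 0.0, False), (30.0, 0.0, False),
    ]
    rates = detection_rates(results)
    print(rates)
    print(blind_spots(rates))
```

Plotting the rates as a heat map over the grid gives the kind of graphical view described above, and the flagged cells suggest where to collect more data.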

The research team has released several papers around the work, and Alvey and Buck presented the research at the International Conference on Computer Vision last month.

They are now releasing a series of tutorials on how they design simulations to collect data, as well as any code other researchers might need to reproduce their work.

“It’s reproducible research,” Anderson said. “We’re not just publishing a paper, we’re releasing datasets, codes and providing training videos alongside the paper.”

The research team believes the work could revolutionize machine learning.

“High performance computing and data changed the face of AI a decade ago,” Anderson said. “It brought us out of a dry spell. We believe that the recent convergence around generating photorealistic data with ground truth could be a next leap in AI.”

“The ability to capture data in simulation and fully control AI parameters and then apply that to the world is really valuable,” Buck said. “We can do all sorts of interesting things with this data, and I’m excited to see what others can do with this approach.”

The papers, video tutorials and other resources can be found on the MINDFUL Lab website.