November 29, 2021

A Mizzou Engineering team has designed a physics-based model to automatically detect shadows in large-scale aerial images — a development that could lead to improvements in self-driving cars, drones and autonomous robotics. The research team published and presented their findings at the International Conference on Computer Vision (ICCV) Workshop on Analysis of Aerial Motion Imagery (WAAMI) in October.

Shadows are important because they change how objects look. While humans can make sense of shadows, artificial intelligence (AI) cannot easily detect objects in shadows unless it’s trained to do so. Currently, some self-driving vehicles compensate for that by using lidar, which sends out laser beams to create three-dimensional representations of the surrounding environment.

“Algorithms aren’t as capable as people, so [an algorithm] needs to be able to predict, ‘this car, when it drives into a shadow, looks totally different than it does outside of the shadow,’” said Joshua Fraser, a research scientist in electrical engineering and computer science (EECS). “Our computer vision-based trackers can’t do as well unless the algorithms have additional context and anticipate, ‘you’re going into a shadow.’ This is where shadow detection comes in. It’s expensive to render and figure out where the shadows lie, so the idea is if we can train a neural network to predict the presence of shadows, then we can do it much more efficiently without having to build complete 3D models.”

Training deep neural networks requires large amounts of data. Labeled pixels are fed to the deep learning algorithm, which then learns to recognize certain features: in this case, shadows. However, shadows are especially tricky because their size and darkness change depending on where the sun is relative to objects on the ground. Other effects also produce shadows of different shades, including global illumination from atmospheric scattering of light, light reflecting off objects and multiple overlapping cast shadows.
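
As a rough illustration of why the sun's position matters, take the simplest geometric case: a vertical object of height h on flat ground casts a shadow of length h / tan(α), where α is the sun's elevation above the horizon. The sketch below assumes flat terrain and a point-like sun, and it ignores the global illumination effects just described; the function name is hypothetical and not taken from the team's work.

```python
import math


def shadow_length(object_height_m: float, sun_elevation_deg: float) -> float:
    """Length of the shadow a vertical object casts on flat ground.

    Simplified geometry (flat terrain, point-like sun): L = h / tan(elevation).
    """
    if not 0.0 < sun_elevation_deg <= 90.0:
        raise ValueError("sun must be above the horizon")
    return object_height_m / math.tan(math.radians(sun_elevation_deg))


if __name__ == "__main__":
    # The same hypothetical 20 m building at three sun elevations.
    for elev in (60, 30, 10):
        print(f"{elev:>2} deg -> {shadow_length(20.0, elev):6.1f} m")
```

The same 20-meter object casts a shadow roughly ten times longer at a 10-degree sun than at a 60-degree sun, so an object's appearance near a building can change sharply over the course of a day.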

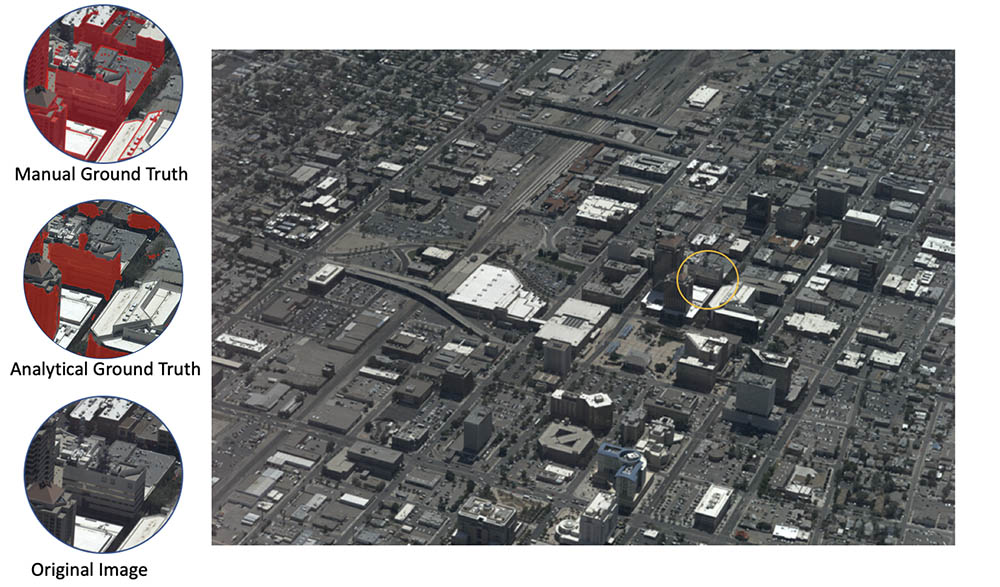

To train the neural network to recognize shadows, the group used aerial images of downtown Albuquerque, New Mexico, and Columbia, Missouri. The data set was unique in that its images were captured from an altitude between those of low-flying drones and high-resolution satellites, Fraser said. And unlike satellite images, which depict scenes looking straight down, the city-scale images were collected from all angles, providing a 360-degree representation of each city and more continuous temporal coverage.

The team used those images to create accurate 3D point cloud reconstructions of the urban environment, which can then be loaded into a game engine or similar simulation environment to render shadows and generate precise shadow masks for training the AI system.

“It is a complex pipeline,” said Deniz Kavzak Ufuktepe, a PhD student in computer science and first author on the paper. “First, we have large, wide-area, city-scale images, followed by 3D reconstruction. Then we apply a physics-based algorithm to predict the shadow masks analytically. We use these masks as ground-truth labels to train a deep learning neural network.”

An early stage of the shadow detection pipeline, calculating the solar angles at specific times of day, was provided by Ekincan Ufuktepe, an instructor of electrical engineering and computer science. He modified an open-source algorithm that draws not only on data from the images but also on metadata such as latitude, longitude and time zone to calculate the sun’s position, which is then used to predict shadows.

“For astronomical calculations, you have to know the time accurately, and you have to know the observer’s viewing geometry, the spherical coordinates with respect to a local coordinate system, in order to get the sun’s path on the celestial sphere, which varies with date,” said Kannappan Palaniappan, EECS professor and director of the Computational Imaging & VisAnalysis Lab (CIVALab).
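
The calculation Palaniappan describes can be sketched with NOAA's widely used low-precision approximation: compute the solar declination and the equation of time from the date, turn the timestamp and longitude into an hour angle, then combine these with the latitude to get the sun's elevation and azimuth. This is not the open-source algorithm the team modified; it is a swapped-in textbook approximation, and the function name and example coordinates are illustrative assumptions. A timezone-aware datetime carries the time-zone metadata the article mentions.

```python
import calendar
import math
from datetime import datetime, timedelta, timezone


def solar_position(when, lat_deg, lon_deg):
    """Approximate sun (elevation, azimuth) in degrees for a given place and time.

    when: timezone-aware datetime (the time-zone metadata lives here).
    lat_deg: latitude, positive north; lon_deg: longitude, positive east.
    Azimuth is measured clockwise from north. Uses NOAA's low-precision
    Fourier fits for the declination and the equation of time.
    """
    t = when.astimezone(timezone.utc)
    days = 366 if calendar.isleap(t.year) else 365
    hour = t.hour + t.minute / 60 + t.second / 3600
    # Fractional year in radians.
    g = 2 * math.pi / days * (t.timetuple().tm_yday - 1 + (hour - 12) / 24)
    # Equation of time (minutes) and solar declination (radians).
    eqtime = 229.18 * (0.000075 + 0.001868 * math.cos(g) - 0.032077 * math.sin(g)
                       - 0.014615 * math.cos(2 * g) - 0.040849 * math.sin(2 * g))
    decl = (0.006918 - 0.399912 * math.cos(g) + 0.070257 * math.sin(g)
            - 0.006758 * math.cos(2 * g) + 0.000907 * math.sin(2 * g)
            - 0.002697 * math.cos(3 * g) + 0.00148 * math.sin(3 * g))
    # True solar time in minutes (already UTC, so no separate zone offset),
    # then the hour angle: 0 at solar noon, negative in the morning.
    true_solar_min = hour * 60 + eqtime + 4 * lon_deg
    ha = math.radians(true_solar_min / 4 - 180)
    lat = math.radians(lat_deg)
    cos_zenith = (math.sin(lat) * math.sin(decl)
                  + math.cos(lat) * math.cos(decl) * math.cos(ha))
    elevation = 90 - math.degrees(math.acos(max(-1.0, min(1.0, cos_zenith))))
    # atan2 gives azimuth from south (positive west); shift to clockwise-from-north.
    azimuth = math.degrees(math.atan2(
        math.sin(ha), math.cos(ha) * math.sin(lat) - math.tan(decl) * math.cos(lat)))
    return elevation, (azimuth + 180) % 360


if __name__ == "__main__":
    # Illustrative capture time; coordinates approximate Columbia, Missouri.
    cdt = timezone(timedelta(hours=-5))
    elev, az = solar_position(datetime(2021, 6, 21, 13, 10, tzinfo=cdt), 38.95, -92.33)
    print(f"elevation {elev:.1f} deg, azimuth {az:.1f} deg")
```

Passing the same instant in two different time zones yields identical angles, which is the point of normalizing to UTC before doing any astronomy.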

Jaired Collins, a PhD student in computer science, wrote software that calculates solar angles in a scalable, distributed way, automating the system so it can be applied to aerial images collected over other cities.

“That’s important because it allows us to apply parallel processing to scale the project, something that cannot be done manually for large city-scale applications. In the Albuquerque dataset, for example, the physics-based approach allowed us to label billions of pixels in a fully automated way, which would otherwise require many person-years of manual labeling effort,” Palaniappan said.
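
Automated labeling scales because each image tile (or each aerial frame, with its own timestamp) can be processed independently. The sketch below, with hypothetical function names, splits a large image into tiles that cover it exactly once and maps a labeling job over them. A thread pool stands in for the team's distributed system, which the article does not describe, and the placeholder job only counts pixels.

```python
from concurrent.futures import ThreadPoolExecutor


def make_tiles(width, height, tile):
    """Split a width x height image into (x0, y0, x1, y1) boxes covering every pixel once."""
    return [(x, y, min(x + tile, width), min(y + tile, height))
            for y in range(0, height, tile)
            for x in range(0, width, tile)]


def label_tiles(tiles, label_tile, workers=8):
    """Apply label_tile to every box concurrently; results keep the tile order."""
    # Threads stand in for distributed workers here; each tile is independent,
    # so the jobs need no coordination with one another.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(label_tile, tiles))


def pixel_count(box):
    """Hypothetical placeholder job; a real one would compute a shadow mask."""
    x0, y0, x1, y1 = box
    return (x1 - x0) * (y1 - y0)


if __name__ == "__main__":
    tiles = make_tiles(10_000, 7_000, 1024)
    print(len(tiles), "tiles,", sum(label_tiles(tiles, pixel_count)), "pixels labeled")
```

For CPU-bound Python work, `concurrent.futures.ProcessPoolExecutor` offers the same `map` interface, provided the job is a module-level function so it can be pickled. For shadow labeling specifically, tiles would also need an overlap margin, because a tall building's shadow can fall across a tile boundary.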

The CIVALab team is also combining their real-world data with simulated environments, which will allow them to adjust the sun’s position based on time of day and season for different cities.

“We’re limited in some of our aerial data collections on a specific day for five to ten minutes or so,” Palaniappan said. “So the solar angle is fixed and the shadows are mostly fixed. In a simulated environment, we have greater flexibility to adjust the sun’s position in the sky based on geographic location and where it would be at different times of day and different times of the year. This enables rich data augmentation and supervision for deep learning with known shadow regions to create a simulation environment with real-world models blended with photorealistic simulators.”

PhD student Timothy Krock was also a co-author of the paper and worked on manual annotations for validating the shadow detection pipeline.

Filiz Bunyak, an EECS assistant research professor and co-chair of the ICCV WAAMI organizing committee, has used the model and praised the team for helping automate the process.

“Shadows are an important aspect of video analytics for detection of objects that will be analyzed and tracked,” she said. “The team did a great job developing a physics-based system that gives you exact boundaries of shadows without labor-intensive processes. As a computer vision end user, this will have a really high impact on aerial image analysis.”

The research was partially funded by Army Research Laboratory cooperative agreement W911NF-1820285 and Army Research Office DURIP W911NF-1910181.