September 29, 2022

Augmented reality (AR) has the potential to bring coursework to life. Imagine, for instance, learning about supply chains by seeing the various components of an operation laid out in front of you, from the manufacturing plant to the delivery site.

Jung Hyup Kim, an associate professor of industrial engineering, is exploring how best to incorporate AR technology into the engineering curriculum. He is the principal investigator on a National Science Foundation grant that allows him to design and test AR lessons in a new lab in Lafferre Hall.

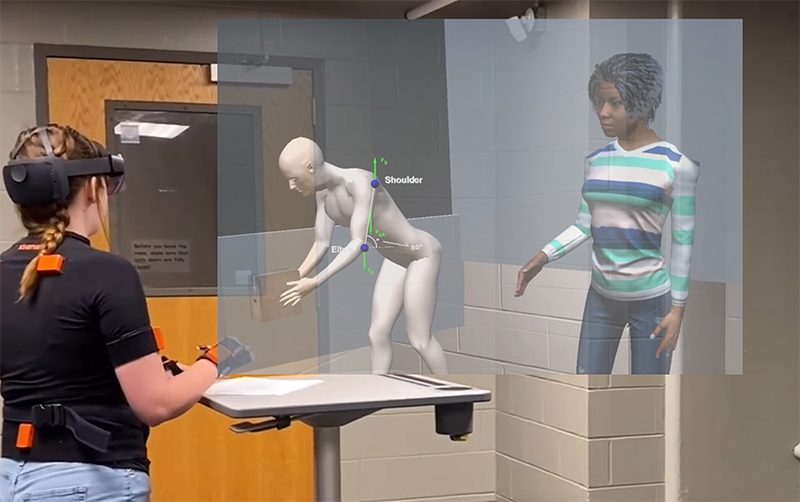

Specifically, Kim is researching the use of real-time tracking and eye-tracking technologies for integrating AR into undergraduate engineering labs.

Unlike virtual reality, which immerses a user in a simulated setting, AR superimposes virtual objects on a real-world environment.

The technology has not yet been widely adopted in education, in part because of technical challenges. Previous studies have shown that students lose attention or feel uncomfortable with complex augmented materials. Often, the digital components don’t appear in the right place, or they don’t display correctly. In an educational setting, flaws like these can cause distraction and confusion.

Last year, Kim conducted a pilot study of AR to address those technical challenges. He found that using a location-tracking device helped align augmented content more accurately with the physical environment.

In the new lab, the first objective is to integrate real-time 3D motion- and location-tracking systems to improve student engagement. The GPS-based system would be able to determine where a student is and respond to the student’s posture. This will ensure that students see the appropriate images in the right spots, so they can understand the context of the digital assets.

Another objective, Kim said, is to track eye movements and metacognition to determine whether a student is actually paying attention and grasping the concepts.

“That’s one of the challenges of online education — students may watch a video, but we have no idea if they understood it or not,” Kim said. “In order to prevent that, we’re developing mechanisms to analyze eye tracking images while students are watching AR models and images. Based on eye gaze movement and where they are looking, we will know whether they are paying attention or not. If they are not paying attention or it looks like a student isn’t learning, we’ll have to reengage or do something to help them learn the content.”

The third objective of the lab is to launch an exploratory study that tests AR learning applications in an engineering lab, evaluating them based on student feedback and performance.

In Kim’s new lab, students will move through a series of stations, each with a different AR experience. They will stand at a designated spot to complete a module before proceeding to the next. Once they complete the tasks at all stations, students will be tested to ensure they understood the concepts.

Once the research team has worked out the kinks, the AR system could be integrated into courses across Mizzou Engineering.

“We could change content to be used in other classes,” Kim said. “Whatever system we come up with, we can apply anywhere.”
