March 08, 2021

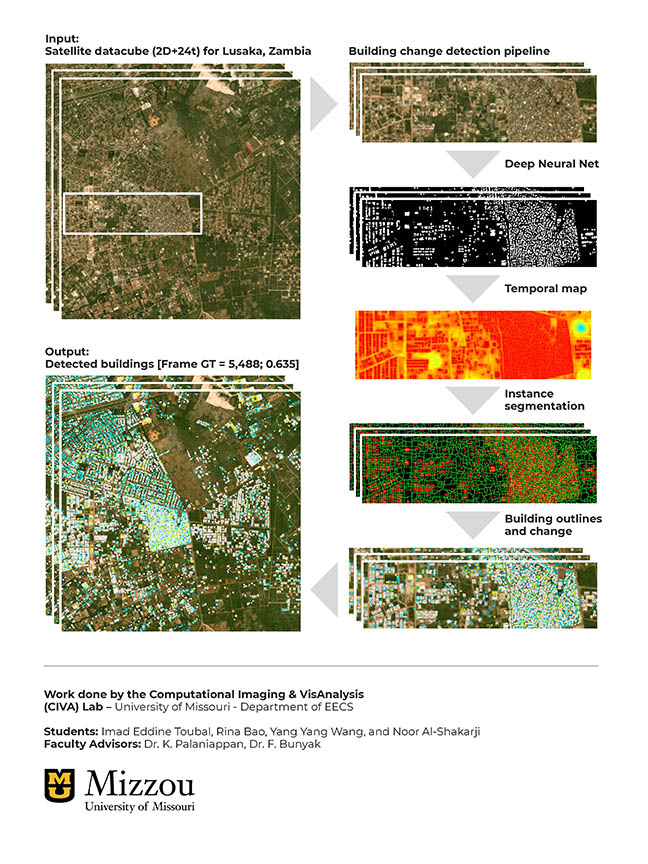

Rina Bao, Imad Toubal, YangYang Wang and Noor Al-Shakarji, four computer science PhD students in the Computational Imaging and VisAnalysis (CIVA) Lab at Mizzou Engineering, took the top spot in the graduate student category of the SpaceNet 7 competition at the 34th Conference on Neural Information Processing Systems (NeurIPS 2020). The SpaceNet 7 challenge asked participants to identify and track building changes in 101 cities around the world using medium-resolution (4 m) satellite imagery. SpaceNet 7 was organized by In-Q-Tel CosmiQ Works, Amazon Web Services, Maxar Technologies, Planet, Capella Space, IEEE GRSS and the National Geospatial-Intelligence Agency (NGA). NeurIPS is one of the leading artificial intelligence (AI) conferences. When all scores were calculated and reviewed, the team had placed 8th overall among more than 300 entries and was the top-ranked US team.

What makes this noteworthy is the steep complexity of the Multi-Temporal Urban Development Challenge, which started with a baseline model that had an accuracy of just 17 percent, said Kannappan Palaniappan, professor of Electrical Engineering and Computer Science and the faculty advisor for the team. Localizing and tracking the construction of individual buildings across the globe helps advance geospatial foundational mapping at scale using tip-and-cue overhead imagery, with applications in humanitarian and disaster response planning, population dynamics, land use mapping and urban modeling.

The challenge required participants to identify building changes in order to assess urbanization across 101 unique geographic areas covering 41,250 square kilometers. The monthly satellite imagery was collected by Planet Labs’ Dove constellation between 2017 and 2020. Specifically, the team had to pinpoint where and when buildings were built or demolished in each city over a two-year span by identifying building footprints that averaged just eleven pixels in size. SpaceNet 7 provided around 24 images for each location, one satellite photo per month over the two years. The imagery was also not high resolution: each pixel covered four meters, or about 13 feet, of ground distance.

“It was probably one of the most complicated challenges our lab has worked on because of the sheer volume of spatial-temporal data, extremely small object size and high density of buildings in medium-resolution satellite imagery, coupled with clouds, haze and dramatic seasonal changes in vegetation that is a background distractor, compared to the more subtle changes in building appearance,” Palaniappan said.

Against the Odds

The odds weren’t in Mizzou’s favor. This was the team’s first SpaceNet challenge. Competitors included experienced participants from high-tech companies and other universities, some of whom had placed in the top five in previous SpaceNet challenges.

Compared with their competitors, Mizzou had limited high-performance computing resources. Downloading the satellite imagery dataset, organizing it, visualizing the 4 km × 4 km data cubes, running an initial baseline deep learning model and submitting its output to the challenge website in the correct format for scoring took five days. That was a significant amount of time, considering the team entered the challenge just one month before the deadline.

COVID-19 didn’t help, either. Because the students couldn’t work together in person, each used different approaches and computing frameworks as part of a workflow pipeline to tackle various parts of the building change detection problem. In the end, to meet reproducibility requirements, one team member, YangYang Wang, had to convert several components coded in MATLAB to Python.

“With COVID, it was hard to collaborate because people were working remotely,” she said. “And we had a short time frame. So it was really amazing that we could accomplish this.”

Rina Bao focused on the building instance detection side: how to train the deep learning network to distinguish individual buildings that were so small and so close together that their footprints looked like a single building in the satellite images. “Most of the top approaches used an ensemble of four to ten models to improve accuracy,” she said. “But we used only one deep model combined with a unique tracking module to handle multiscale buildings, just like the winning team from Baidu that used a single HRNet model, which sped up training and inference times.”

Noor Al-Shakarji had previously used an algorithm to track other objects, such as cells and vehicles. But she quickly discovered that it didn’t scale to the satellite imagery.

“I’m used to working with 100 to 500 objects in each video frame, but in this case, it was more like 4,000 to 40,000 building objects in each satellite image frame,” she said. “It was very, very challenging.”

Practical Applications

Testing the scalability and adaptability of the lab’s current deep learning algorithms across different disciplines and applications was one reason the team entered the competition, Palaniappan said. All of the students are pursuing doctoral research that involves developing real-world, large-scale computer vision and AI capabilities for autonomous systems.

“Even though satellite imagery is not something we have been working on recently, it is representative of the need for fast geospatial foundational mapping, and helps us to better understand our computer vision scene perception algorithms, to make them more robust, ruggedized, resilient and scalable,” he said. “AI and deep learning are reinventing the art of the possible. It’s what everyone across many fields is excited about, and the reason that there are so many open challenges using difficult-to-acquire and expensive-to-ground-truth datasets. It broadens community participation, whether it’s developing AI in medicine for clinical diagnostic applications or in national security and defense for situational awareness applications.”

The team used a hybrid approach that combined deep learning and machine learning to detect and track buildings with computer vision and statistical methods to further improve their change analysis results, said Filiz Bunyak Ersoy, an assistant research professor and co-advisor to the group.

“What the team did was process individual images using AI and machine learning techniques, then they integrated the building likelihood maps, using not just single image information, but using temporal persistence information across multiple months to enhance change detection,” she said.
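The idea of using temporal persistence across months can be illustrated with a short sketch. This is not the team’s code: it assumes a stack of monthly per-pixel building likelihood maps with values between 0 and 1, and it uses a sliding-window temporal median (a swapped-in technique chosen for illustration) to suppress one-month spikes, such as those caused by clouds or haze, before thresholding. The function names, the three-month window and the 0.5 threshold are hypothetical.

```python
import numpy as np


def persistent_building_mask(likelihoods: np.ndarray, window: int = 3,
                             threshold: float = 0.5) -> np.ndarray:
    """Smooth monthly building likelihoods over time, then threshold.

    likelihoods: array of shape (T, H, W), one per-pixel building
    probability map per month (hypothetical input format).
    Returns a boolean array of the same shape.
    """
    num_months = likelihoods.shape[0]
    half = window // 2
    smoothed = np.empty(likelihoods.shape, dtype=float)
    for t in range(num_months):
        # Clip the temporal window at the start and end of the series.
        lo, hi = max(0, t - half), min(num_months, t + half + 1)
        # A median over neighbouring months ignores a single-month spike
        # (e.g. a cloud or haze artifact) but keeps changes that persist.
        smoothed[t] = np.median(likelihoods[lo:hi], axis=0)
    return smoothed >= threshold


def first_appearance(mask: np.ndarray) -> np.ndarray:
    """Index of the first month each pixel is labeled building, or -1 if never."""
    ever_building = mask.any(axis=0)
    # argmax on a boolean array returns the first True along the time axis.
    first_month = mask.argmax(axis=0)
    return np.where(ever_building, first_month, -1)
```

For a single pixel whose monthly likelihoods are 0.1, 0.1, 0.9, 0.1, 0.2, 0.8, 0.9, 0.9, thresholding each month on its own flags month 2, a one-month spike. The smoothed mask first flags month 5, when the building actually persists. A median was chosen over a mean because one bright outlier cannot pull it above the threshold.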

Tracking building changes over time from satellite imagery has several practical applications. It can help communities better plan for or respond to disasters; assist with environmental monitoring and land use change; provide a global characterization of urbanization and population growth; and support the development of sustainable infrastructure for smart cities.

“One of the applications for building detection is for companies trying to create and update maps of the world,” Imad Toubal said. “Google Maps, Microsoft Flight Simulator and OpenStreetMap are examples of mapping applications, but they rely on people manually adding or correcting buildings. Having an algorithm that automatically and intelligently detects and segments buildings would be really helpful and would cut a lot of the manual work being done. This would automate a lot of that using high-resolution satellite imagery and provide a more reliable mapping system, especially for remote locations.”

Dr. Mark Tschopp, Army Research Laboratory (ARL) Regional Lead, congratulated the University of Missouri CIVA Lab team and voiced his support for their effort.

“It’s very rewarding to see that collaborative partnerships with the Army and DOD in the area of artificial intelligence and machine learning for the Video Analytics and Image Processing for Multiview Scene Understanding project have translated to our partners placing as the top ranked US team in this worldwide competition,” Dr. Tschopp said.

Dr. Raghuveer Rao, the ARL’s Cooperative Agreement Manager for Mizzou’s Army-funded project, echoed the sentiment.

“I offer my hearty congratulations to Dr. Palaniappan and the students,” Dr. Rao said. “The problem addressed is relevant to Army reconnaissance and related applications, and we look forward to further exciting results from the Mizzou team.”

The team will apply what they’ve learned in SpaceNet 7 to Army Research Laboratory projects, including multiple object tracking in video, building three-dimensional point clouds at city scale and drone-based mapping and navigation.